Welcome to duiniwukenaihe's Blog!

This is where I record my journey learning ops.

2021-04-26-CentOS 8: Failed to restart network.service: Unit network.service not found

Background:

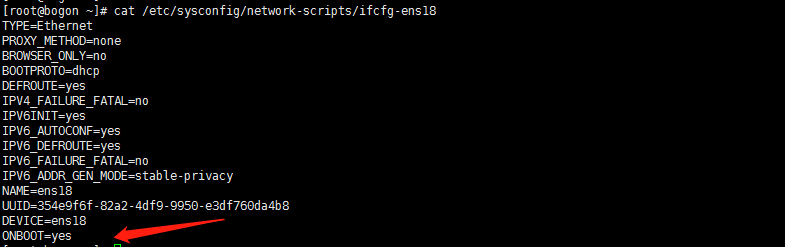

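All of our production servers come from cloud vendors. A few run CentOS 8, but I have generally never needed to restart the network service on them; I vaguely remember the command being systemctl restart network. Today, with some free time, I set up a CentOS 8 server on the internal network. After installation, I edited the network configuration and set ONBOOT=yes so that the NIC is activated at system boot:

```shell
vi /etc/sysconfig/network-scripts/ifcfg-ens18
```

Of course, the part after ifcfg- differs from machine to machine; it is often eth0.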

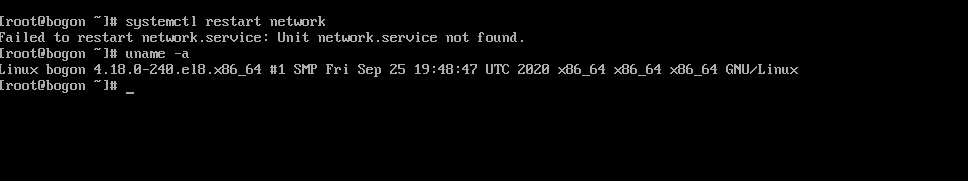
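For reference, the same ONBOOT edit can be scripted instead of done in vi. This is a minimal sketch, not part of the original steps: `set_onboot_yes` is a hypothetical helper name, and it assumes GNU sed (for `-i`), as shipped with CentOS.

```shell
#!/usr/bin/env bash
# set_onboot_yes: hypothetical helper that makes an ifcfg-* file activate at boot.
# Assumes GNU sed (for -i), as shipped with CentOS.
set_onboot_yes() {
  local cfg="$1"
  if grep -q '^ONBOOT=' "$cfg"; then
    # Replace the existing ONBOOT line, whatever its current value is
    sed -i 's/^ONBOOT=.*/ONBOOT=yes/' "$cfg"
  else
    # No ONBOOT line yet: append one
    echo 'ONBOOT=yes' >> "$cfg"
  fi
}

# Usage (as root):
#   set_onboot_yes /etc/sysconfig/network-scripts/ifcfg-ens18
```

On CentOS 8, NetworkManager still reads these ifcfg files (through its ifcfg-rh plugin), so after an edit like this, `nmcli connection reload` followed by `nmcli connection up ens18` should be enough to apply it without the old network service.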

Then I ran systemctl restart network and got: Failed to restart network.service: Unit network.service not found...

What? The network service is gone?

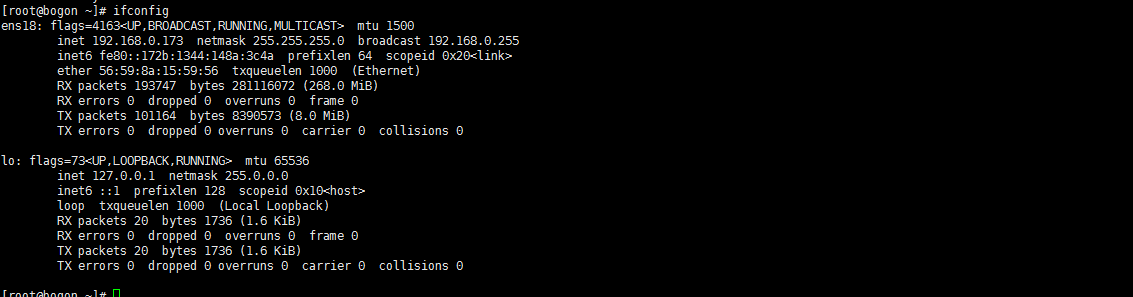

I searched Baidu for related information; see https://www.cnblogs.com/djlsunshine/p/9733182.html. CentOS 8 uses NetworkManager's nmcli command for this kind of configuration:

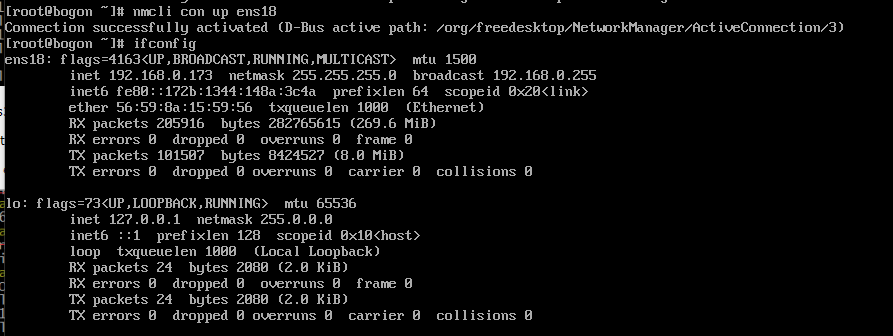

I went with the simplest approach: switch networking off and back on, which activates the NIC:

```shell
nmcli networking off
nmcli networking on
```

I. A closer look at nmcli

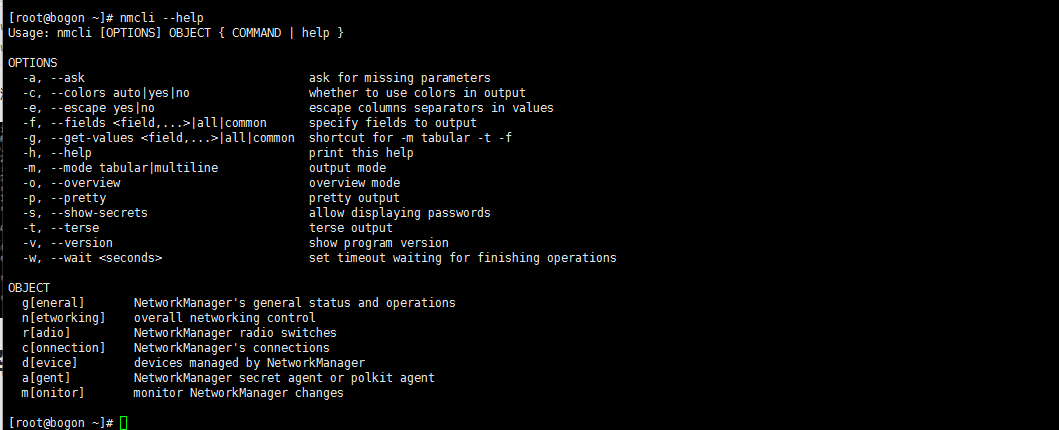

1. nmcli --help

The simplest way to get to know a command is to read its documentation, which on Linux usually means --help. The basic command format is: nmcli OPTIONS... ARGUMENTS...

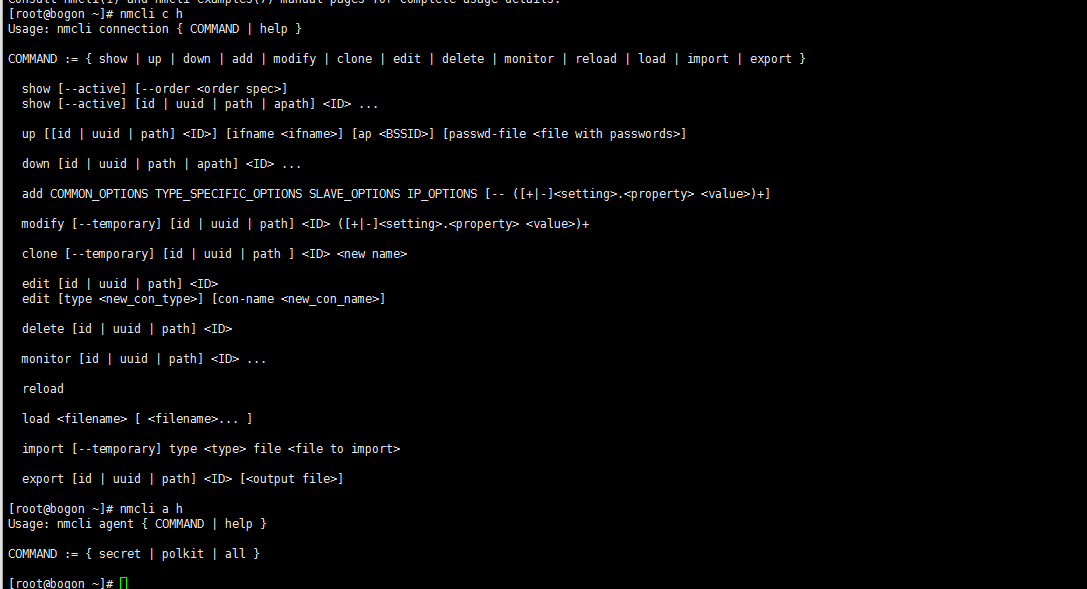

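`nmcli c h` shows the help for the connection object.

I won't try to understand everything; I'll just look at what I need...

2. Some commands I personally find useful: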

1. nmcli c 查看连接信息

[root@bogon ~]# nmcli c NAME UUID TYPE DEVICE ens18 354e9f6f-82a2-4df9-9950-e3df760da4b8 ethernet ens182. nmcli d show ens18 显示网卡详细信息

[root@bogon ~]# nmcli d show ens18 GENERAL.DEVICE: ens18 GENERAL.TYPE: ethernet GENERAL.HWADDR: 56:59:8A:15:59:56 GENERAL.MTU: 1500 GENERAL.STATE: 100 (connected) GENERAL.CONNECTION: ens18 GENERAL.CON-PATH: /org/freedesktop/NetworkManager/ActiveConnection/1 WIRED-PROPERTIES.CARRIER: on IP4.ADDRESS[1]: 192.168.0.173/24 IP4.GATEWAY: 192.168.0.1 IP4.ROUTE[1]: dst = 0.0.0.0/0, nh = 192.168.0.1, mt = 100 IP4.ROUTE[2]: dst = 192.168.0.0/24, nh = 0.0.0.0, mt = 100 IP4.DNS[1]: 192.168.0.13 IP4.DNS[2]: 192.168.0.8 IP4.DOMAIN[1]: joychina.net IP6.ADDRESS[1]: fe80::172b:1344:148a:3c4a/64 IP6.GATEWAY: -- IP6.ROUTE[1]: dst = fe80::/64, nh = ::, mt = 100 IP6.ROUTE[2]: dst = ff00::/8, nh = ::, mt = 256, table=2553. 禁用启用网卡

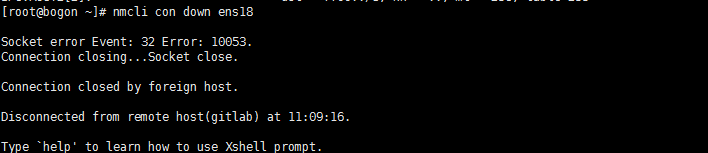

nmcli con down ens18 nmcli con up ens18
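Because `nmcli d show` prints one `FIELD:   value` pair per line, pulling out a single value is easy with awk. A small sketch (the function name is hypothetical) that prints only the IPv4 addresses from the same command:

```shell
#!/usr/bin/env bash
# ip4_addresses: hypothetical helper that reads `nmcli device show` output on
# stdin and prints each IPv4 address (CIDR form), one per line.
# Each output line is "FIELD:   value", so the value is the 2nd
# whitespace-separated column on the IP4.ADDRESS[n] lines.
ip4_addresses() {
  awk '$1 ~ /^IP4\.ADDRESS/ { print $2 }'
}

# Usage:
#   nmcli d show ens18 | ip4_addresses
```

For scripts, nmcli's terse output mode (`-t`, or `-g` for values only, if your nmcli version has it) is a sturdier choice than parsing the human-readable layout.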

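I won't go into the more complicated features; I don't really need them personally...

II. From nmcli to some differences between CentOS 8 and CentOS 7

For the specific differences between CentOS 8 and 7, see the official documentation: https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html-single/considerations_in_adopting_rhel_8/index. The official docs are still the place to look. You can also read https://blog.csdn.net/seaship/article/details/109292307.

For me personally, the biggest difference is the dnf command... yum still works, of course, and I still use yum. I'll pick up the other differences gradually at work...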

-

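A story that started with HTTP code 304

Background:

Development at our company happens on an internal network, and the router is configured to allow access only to specific resource sites. Since that restriction went in, certain resources on the isolated internal network have been getting stuck in "pending". I never dug into it, because a colleague on the same internal VLAN has internet access, and usually a manual refresh on his side made the problem go away. Recently it has been happening a lot, so I'm recording the troubleshooting process and how I used Tencent Cloud's CLS log service.

I. Analyzing the CDN logs

1. Analyze the HTTP status codes in the logs (the same status we use all the time in nginx)

I looked carefully at the CDN log monitoring; the HTTP codes are as follows (all resources are on Tencent Cloud; unless stated otherwise, every service mentioned is a Tencent Cloud service):

Looking at the monitoring details, the 4XX codes were basically all 404s, so I ignored them. Then I took a look at the 3XX monitoring,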
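Besides the console charts, status codes can also be counted straight from a raw access log. A minimal sketch, assuming nginx's default `combined` log format, where `$status` is the 9th whitespace-separated field; CDN log downloads may use a different layout, in which case the field index has to change. The function name is hypothetical:

```shell
#!/usr/bin/env bash
# status_counts: hypothetical helper that counts HTTP status codes in an
# access log read from stdin. Assumes nginx's "combined" format:
#   $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent ...
# so the status code is field 9.
status_counts() {
  awk '$9 ~ /^[0-9][0-9][0-9]$/ { c[$9]++ } END { for (s in c) print s, c[s] }' | sort
}

# Usage:
#   status_counts < /var/log/nginx/access.log
```

A pile of 304s is not an error in itself: it means the client sent a conditional request (If-Modified-Since or If-None-Match) and the server replied that the client's cached copy is still valid.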


-

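About a Helm-installed Jenkins: a pipeline with agent label master stays Waiting

Jenkins was installed with Helm. As a test, I set the pipeline's agent label to the master node:

```groovy
pipeline {
    // The node this pipeline runs on
    agent { label "master" }
    // Pipeline options
    options {
        skipStagesAfterUnstable()
    }
    // Pipeline stages
    stages {
        // Stage 1: check out the code
        stage("CheckOut") {
            steps {
                script {
                    println("Checking out code")
                    echo 'Hello Zhang Peng'
                    sh "echo ${env.JOB_NAME}"
                }
            }
        }
        stage("Build") {
            steps {
                script {
                    println("Running build")
                }
            }
        }
    }
    post {
        always {
            script {
                println("Things to do whenever the pipeline finishes")
            }
        }
        success {
            script {
                println("Things to do after the pipeline succeeds")
            }
        }
        failure {
            script {
                println("Things to do after the pipeline fails")
            }
        }
        aborted {
            script {
                println("Things to do after the pipeline is aborted")
            }
        }
    }
}
```

When I ran the job, it waited for a long time and never finished. Very puzzling... it's just a few simple prints, so how could that happen? With agent any, it completed quickly.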

-

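Helm-installed Jenkins and my time zone OCD

I installed Jenkins with Helm and use it to build applications. I don't know whether anyone else has run into the same problem as me:

The time zone in Build History is UTC, but the Stage View shows the correct UTC+8 Shanghai time... What? What am I supposed to do? I'm a bit obsessive about this kind of thing. I'm not very good with Helm, and a look through the official docs didn't turn up a way to change the time zone. Do I really have to rebuild the image just to change the time zone? Honestly, I'm starting to regret using Helm...

By chance I found a browser extension through Google: https://chrome.google.com/webstore/detail/jenkins-local-timezone/omjcneepammbodkobeobihfpfngbdjoc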


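After installing the extension it looks like this. I'm just fooling myself, I suppose...

Going forward, either I find time to learn Helm properly, or I go back to installing with plain YAML Deployments. Better to stick with the approach you're good at.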

-

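Connecting Tencent Cloud CLS (Cloud Log Service) to Grafana

Background:

Tencent Cloud CLB (Cloud Load Balancer) is integrated with CLS (Cloud Log Service). The CLS column had a post about connecting CLS to Grafana, and I wanted to try it myself. My Grafana was set up with Prometheus-operator in a Kubernetes cluster; see https://cloud.tencent.com/developer/article/1807805. Below is a record of my setup process.

I. Configuration in Grafana

This follows https://cloud.tencent.com/developer/article/1785751. I had already installed the pie chart plugin while setting up Prometheus-operator, so what matters now is installing the CLS plugin and changing the Grafana configuration.

1. Installing the Grafana CLS plugin

Installing the plugin is fairly simple: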

```shell
### Get the name of the Grafana pod
kubectl get pods -n monitoring
### Open a shell in the Grafana container
kubectl exec -it grafana-57d4ff8cdc-8hwsq -n monitoring -- bash
### Download and unzip the CLS plugin
cd /var/lib/grafana/plugins/
wget https://github.com/TencentCloud/cls-grafana-datasource/releases/latest/download/cls-grafana-datasource.zip
unzip cls-grafana-datasource.zip
```

I skipped checking the version requirements, because my Grafana image version is 7.4.3, which had already been confirmed to work. How do you get Grafana to load the plugin? I usually use the crudest method: delete the pod.

```shell
kubectl delete pods grafana-57d4ff8cdc-8hwsq -n monitoring
kubectl get pods -n monitoring
```

At this point, for an ordinary plugin (one downloaded or installed from the official source with grafana-cli plugins install), you could simply open Grafana and confirm that the plugin installed successfully. That doesn't work for the CLS plugin. Why? Note that Tencent Cloud's plugin is not officially signed, and Grafana only trusts unsigned plugins once a configuration parameter is enabled.

2. How do you modify the Grafana configuration file?

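Following https://cloud.tencent.com/developer/article/1785751, once the plugin is in place, grafana.ini has to be modified. Looking closely, the Grafana in prometheus-operator uses the default configuration file, and it isn't mounted in any other way. So what now? See: https://blog.csdn.net/u010918487/article/details/110522133

Mount the Grafana configuration file as a ConfigMap.

Steps:

1. Copy grafana.ini from the Grafana container to the local machine

A chance to review the kubectl cp command: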

```shell
kubectl cp grafana-57d4ff8cdc-ms4z9:/etc/grafana/grafana.ini /root/grafana/grafana.ini -n monitoring
```

Then edit grafana.ini locally, changing the line ;allow_loading_unsigned_plugins = to the following:

```ini
allow_loading_unsigned_plugins = tencent-cls-grafana-datasource
```

In the screenshot I left the original line in place only to make the change easier to see:

This setting allows Grafana to load the unsigned tencent-cls-grafana-datasource plugin.
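Instead of editing by hand, the same one-line change can be applied with sed. A minimal sketch: `allow_unsigned_plugin` is a hypothetical helper name, it assumes GNU sed, and it assumes the plugin IDs contain no `/` or `&` (either would confuse the sed replacement).

```shell
#!/usr/bin/env bash
# allow_unsigned_plugin: hypothetical helper that turns the commented-out default
#   ;allow_loading_unsigned_plugins =
# in grafana.ini into an active setting listing the given plugin ID(s).
allow_unsigned_plugin() {
  local ini="$1" plugin_ids="$2"
  # Matches both the commented (;) form and an already-active form of the key
  sed -i -E "s/^;?allow_loading_unsigned_plugins[[:space:]]*=.*/allow_loading_unsigned_plugins = ${plugin_ids}/" "$ini"
}

# Usage, on the copy fetched with kubectl cp:
#   allow_unsigned_plugin /root/grafana/grafana.ini tencent-cls-grafana-datasource
```

Grafana reads this setting as a comma-separated list, so several unsigned plugin IDs can be passed as one string.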

2. Load the modified grafana.ini into the Kubernetes cluster as a ConfigMap

```shell
kubectl create cm grafana-config --from-file=`pwd`/grafana.ini -n monitoring
```

3. Modify grafana-deployment.yaml to mount grafana-config

```yaml
######## Add the following under volumeMounts:
- mountPath: /etc/grafana
  name: grafana-config
  readOnly: true
######## Add the following under volumes:
- configMap:
    name: grafana-config
  name: grafana-config
```

```shell
kubectl apply -f grafana-deployment.yaml -n monitoring
```

Wait for the Grafana pod to be redeployed successfully:

3. Configuration in the Grafana UI

1. In the left navigation bar, click [Create Dashboards].

2. On the Data Sources page, click [Add data source].

3. Select [Tencent Cloud Log Service Datasource] and configure the data source as described below:

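Quoted from https://cloud.tencent.com/developer/article/1785751:

| Setting | Description |
| --- | --- |
| Security Credentials | SecretId, SecretKey: the API request keys used for authentication. You can get them from API Key Management. |
| Log Service Info | region: the short name of the CLS region; for example, enter ap-beijing for Beijing. See the region list for the full list. Topic: the log topic ID. |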

I logged in to the Tencent Cloud CAM console at https://console.cloud.tencent.com/cam and used Quick Create User to create a user named cls, with access method: programmatic access, and user permission: QcloudCLSReadOnlyAccess. I unchecked all the message types the user can receive.

-

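Kubernetes 1.20.5: service registration and discovery with Nacos

Background:

Following Setting up Nacos on Kubernetes 1.20.5, I have Nacos running in the Kubernetes cluster and want to try out service registration and discovery. I'd also like to try integrating Sentinel and the rest; in short, the full Spring Cloud Alibaba experience... One step at a time, haha. First up: service registration and discovery. Special thanks to https://blog.didispace.com/spring-cloud-alibaba-1/: I stumbled on 程序猿DD's series yesterday. It's very good and I've bookmarked it.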

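I. Building the application images with Maven

Note: the Maven packaging and build process below is basically copied from https://blog.didispace.com/spring-cloud-alibaba-1/, so I won't just re-post the code here. All the demo code can be downloaded from 程序猿DD's GitHub.

To be clear about the provider and the consumer: alibaba-nacos-discovery-server is the service provider, and alibaba-nacos-discovery-client-common is the service consumer.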

1. Build the alibaba-nacos-discovery-server image

Once more, for emphasis: this one is the service provider!

Because my test build is meant to run in the Kubernetes cluster, in the same namespace as the Nacos service, I used the internal service name to connect to Nacos. Running the Maven package goal produces alibaba-nacos-discovery-server-0.0.1-SNAPSHOT.jar in the target directory.

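Upload the jar to the server, rename it to app.jar, and reuse this Dockerfile:

```dockerfile
FROM openjdk:8-jdk-alpine
VOLUME /tmp
ADD app.jar app.jar
ENTRYPOINT ["java","-Djava.security.egd=file:/dev/./urandom","-jar","/app.jar"]
```

Build the image and push it to an image registry (I use Tencent Cloud's private registry; you can also install Harbor as your own registry).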

```shell
mv alibaba-nacos-discovery-server-0.0.1-SNAPSHOT.jar app.jar
docker build -t ccr.ccs.tencentyun.com/XXXX/alibaba-nacos-discovery-server:0.1 .
docker push ccr.ccs.tencentyun.com/XXXX/alibaba-nacos-discovery-server:0.1
```
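If you rebuild often, the rename step can be made a little safer by refusing to continue unless target/ contains exactly one jar, so a stale jar from an older version isn't picked up by mistake. A minimal bash sketch; `prepare_app_jar` is a hypothetical name, and it copies rather than moves so target/ stays intact:

```shell
#!/usr/bin/env bash
# prepare_app_jar: hypothetical helper that copies the single jar in a Maven
# target/ directory to app.jar (the name the Dockerfile above expects).
# Spring Boot's *.jar.original backup does not match *.jar, so it is ignored.
prepare_app_jar() {
  local target_dir="$1" dest="${2:-app.jar}"
  local jars=("$target_dir"/*.jar)
  # Without nullglob an unmatched glob stays literal, so check that it exists
  if [ ! -e "${jars[0]}" ]; then
    echo "no jar found in $target_dir" >&2
    return 1
  fi
  if [ "${#jars[@]}" -ne 1 ]; then
    echo "expected exactly one jar in $target_dir, found ${#jars[@]}" >&2
    return 1
  fi
  cp "${jars[0]}" "$dest"
}

# Usage, followed by the same docker build / docker push as above:
#   prepare_app_jar target && docker build -t ccr.ccs.tencentyun.com/XXXX/alibaba-nacos-discovery-server:0.1 .
```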

2. Build the alibaba-nacos-discovery-client-common image

The steps are basically the same as above:

II. Deploying the services to the Kubernetes cluster

1. Deploy alibaba-nacos-discovery-server

cat alibaba-nacos-discovery-server.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: alibaba-nacos-discovery-server
spec:
  replicas: 1
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  selector:
    matchLabels:
      app: alibaba-nacos-discovery-server
  template:
    metadata:
      labels:
        app: alibaba-nacos-discovery-server
    spec:
      containers:
        - name: talibaba-nacos-discovery-server
          image: ccr.ccs.tencentyun.com/XXXX/alibaba-nacos-discovery-server:0.1
          ports:
            - containerPort: 8001
          resources:
            requests:
              memory: "256M"
              cpu: "250m"
            limits:
              memory: "512M"
              cpu: "500m"
      imagePullSecrets:
        - name: tencent
---
apiVersion: v1
kind: Service
metadata:
  name: alibaba-nacos-discovery-server
  labels:
    app: alibaba-nacos-discovery-server
spec:
  ports:
    - port: 8001
      protocol: TCP
      targetPort: 8001
  selector:
    app: alibaba-nacos-discovery-server
```

```shell
kubectl apply -f alibaba-nacos-discovery-server.yaml -n nacos
kubectl get pods -n nacos
```

Log in to the Nacos console: under Service Management > Service List, alibaba-nacos-discovery-server is now registered.

2. Deploy alibaba-nacos-discovery-client-common

This is the consumer. Consumer, consumer, consumer; I keep forgetting which is which.

cat alibaba-nacos-discovery-client-common.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: alibaba-nacos-discovery-common
spec:
  replicas: 1
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  selector:
    matchLabels:
      app: alibaba-nacos-discovery-common
  template:
    metadata:
      labels:
        app: alibaba-nacos-discovery-common
    spec:
      containers:
        - name: talibaba-nacos-discovery-common
          image: ccr.ccs.tencentyun.com/XXXX/alibaba-nacos-discovery-client-common:0.1
          ports:
            - containerPort: 9000
          resources:
            requests:
              memory: "256M"
              cpu: "250m"
            limits:
              memory: "512M"
              cpu: "500m"
      imagePullSecrets:
        - name: tencent
---
apiVersion: v1
kind: Service
metadata:
  name: alibaba-nacos-discovery-common
  labels:
    app: alibaba-nacos-discovery-common
spec:
  ports:
    - port: 9000
      protocol: TCP
      targetPort: 9000
  selector:
    app: alibaba-nacos-discovery-common
```

```shell
kubectl apply -f alibaba-nacos-discovery-client-common.yaml -n nacos
```

At this point, the Nacos console should show the alibaba-nacos-discovery-client-common service as registered.

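3. Scale alibaba-nacos-discovery-server to verify the service

Looking closely at https://blog.didispace.com/spring-cloud-alibaba-1/, the author started two alibaba-nacos-discovery-server instances, i.e. two service providers, to verify Nacos's basic ability to bring instances online and offline. I'll scale out too, and brush up on the scale command while I'm at it:

```shell
kubectl scale deployment/alibaba-nacos-discovery-server --replicas=2 -n nacos
```

Before, requests to the alibaba-nacos-discovery-server service always returned a single IP, 10.0.5.120. Once the Deployment finished scaling out, requests to alibaba-nacos-discovery-client-common (the consumer) returned the two providers' IPs in round-robin order.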
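To make the "two IPs in turn" observation concrete, here is a toy version of round-robin selection. It only illustrates the idea; it is not the load-balancing code the demo client actually uses, and the function and instance names are made up:

```shell
#!/usr/bin/env bash
# pick_round_robin: toy round-robin chooser (hypothetical helper).
# Request number n (counting from 0) goes to instance n mod <instance count>.
pick_round_robin() {
  local n="$1"; shift
  local instances=("$@")
  echo "${instances[$(( n % ${#instances[@]} ))]}"
}

# Usage: four requests across two providers (placeholder names)
#   for i in 0 1 2 3; do pick_round_robin "$i" provider-a provider-b; done
#   -> provider-a, provider-b, provider-a, provider-b
# Taking one instance offline in Nacos is like shrinking the list to one entry:
#   pick_round_robin 7 provider-a   -> provider-a
```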


In the Nacos web console, the service list now shows both the instance count and the healthy instance count of alibaba-nacos-discovery-server as 2:

Opening the alibaba-nacos-discovery-server service details, the cluster section now lists the details of two instances.

The cluster's instance settings include an offline action, which I wanted to try:

I took the 10.5.208 instance offline and sent requests again. Sure enough, only the 10.0.5.120 node remained:

To restore the 10.5.208 provider instance, I clicked online without changing any other settings. Requests to the consumer application should then round-robin between the two IPs with equal weight:

Just a quick record. Small notes add up...

-

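Kubernetes 1.20.5 Sentinel: testing the alibaba-sentinel-rate-limiting service

Background:

Following my previous post, Setting up Sentinel on Kubernetes 1.20.5, I wanted to deploy a service to see how Sentinel behaves. No other services have been integrated yet, so I found a demo; see https://blog.csdn.net/liuhuiteng/article/details/107399979.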

I. Sentinel: testing the alibaba-sentinel-rate-limiting service

1. Build the test jar with Maven

Based on https://gitee.com/didispace/SpringCloud-Learning/tree/master/4-Finchley/alibaba-sentinel-rate-limiting

The only change was pointing the Sentinel dashboard connection at the Sentinel Service IP in the Kubernetes cluster, port 8858.

Package the jar with Maven.

2. Build the image

Actually, IntelliJ IDEA can build the image directly; I'll look into that later. I reused the Dockerfile from my other Spring Boot projects.

cat Dockerfile

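```dockerfile
FROM openjdk:8-jdk-alpine
VOLUME /tmp
ADD app.jar app.jar
ENTRYPOINT ["java","-Djava.security.egd=file:/dev/./urandom","-jar","/app.jar"]
```

Note: I used mv to rename the built jar to app.jar... You could also customize the jar name in pom.xml (for example with finalName).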

```shell
docker build -t ccr.ccs.tencentyun.com/XXXX/testjava:0.3 .
docker push ccr.ccs.tencentyun.com/XXXX/testjava:0.3
```

3. Deploy the test service

cat alibaba-sentinel-rate-limiting.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test
spec:
  replicas: 1
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  selector:
    matchLabels:
      app: test
  template:
    metadata:
      labels:
        app: test
    spec:
      containers:
        - name: test
          image: ccr.ccs.tencentyun.com/XXXX/testjava:0.3
          ports:
            - containerPort: 8001
          resources:
            requests:
              memory: "256M"
              cpu: "250m"
            limits:
              memory: "512M"
              cpu: "500m"
      imagePullSecrets:
        - name: tencent
---
apiVersion: v1
kind: Service
metadata:
  name: test
  labels:
    app: test
spec:
  ports:
    - port: 8001
      protocol: TCP
      targetPort: 8001
  selector:
    app: test
```

Note: I simply named the service test...

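```shell
kubectl apply -f alibaba-sentinel-rate-limiting.yaml -n nacos
```

4. Access the alibaba-sentinel-rate-limiting service and watch the Sentinel dashboard

This is an internal test, so instead of exposing alibaba-sentinel-rate-limiting through an Ingress, I accessed it directly via its ClusterIP: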

```
$ kubectl get svc -n nacos
NAME             TYPE        CLUSTER-IP        EXTERNAL-IP   PORT(S)             AGE
mysql            ClusterIP   172.254.43.125    <none>        3306/TCP            2d9h
nacos-headless   ClusterIP   None              <none>        8848/TCP,7848/TCP   2d8h
sentinel         ClusterIP   172.254.163.115   <none>        8858/TCP,8719/TCP   26h
test             ClusterIP   172.254.143.171   <none>        8001/TCP            3h5m
```

From a server inside the Kubernetes cluster:

```shell
curl 172.254.143.171:8001/hello
```

I ran that curl ten times... then logged in to https://sentinel.saynaihe.com/ to take a look.

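II. Testing Sentinel features

1. Flow control rules

After a few requests, real-time monitoring does show records. So I quickly added a flow control rule to test, with the QPS threshold set to 1: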

The result is below. Ha!

Sure enough, some requests now fail.
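To show what "QPS threshold = 1" means in practice, here is a toy limiter. It is a deliberate simplification: a fixed one-second window with a counter, whereas Sentinel's own flow control is built on sliding-window statistics. The variable and function names are hypothetical:

```shell
#!/usr/bin/env bash
# Toy QPS limiter: a fixed one-second window with a counter (hypothetical sketch).
# Sentinel itself uses sliding-window statistics; this only illustrates the idea.
QPS_LIMIT=1
window_start=-1
window_count=0

# allow_request <epoch-second>: returns 0 (pass) or 1 (blocked).
# Call it directly, not inside $( ), so the counters persist in this shell.
allow_request() {
  local now="$1"
  if [ "$now" -ne "$window_start" ]; then
    # A new second has started: reset the counter
    window_start="$now"
    window_count=0
  fi
  if [ "$window_count" -lt "$QPS_LIMIT" ]; then
    window_count=$((window_count + 1))
    return 0
  fi
  return 1
}

# Usage: ten requests in quick succession
#   for i in $(seq 10); do allow_request "$(date +%s)" && echo pass || echo blocked; done
```

With a threshold of 1, only the first request in each second passes, which matches the mix of successes and failures seen above when requests arrive faster than one per second.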

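What's odd, though, is that the real-time monitoring data often disappears. This is a test setup with no storage configured, and I wonder whether that's the reason. (As far as I know, the Sentinel dashboard keeps monitoring data only in memory, and only for roughly the last five minutes by default, so charts going blank some time after requests stop would be expected.)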

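2. Degrade rules

I won't test the degrade rules; they seem much the same... simple enough.

3. Hot-spot rules

4. System rules

5. Authorization rules

6. Cluster flow control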

Machine list: I'd like to scale the pods to two and see whether the list grows to 2 machines, as I'd expect.

A quick refresher with kubectl scale --help:

```shell
kubectl scale deployment/test --replicas=2 -n nacos
```

For my purposes the basic goal has been reached... I'll keep exploring the rest.

-

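Setting up Sentinel on Kubernetes 1.20.5

Background:

The backend team is going all in on a Spring Cloud architecture, with Alibaba's open-source Nacos as the configuration center. Predictably, we now need to set up Sentinel for testing... Let's start with a demo. There are few examples of running Sentinel on Kubernetes; most of what I found is about the standard Spring Cloud suite, and there is much less on Alibaba's open-source stack. Searching Baidu or Google for sentinel mostly turns up Redis Sentinel... a bit sad. Note: for the setup approach, see https://blog.csdn.net/fenglailea/article/details/92436337?utm_term=k8s%E9%83%A8%E7%BD%B2Sentinel&utm_medium=distribute.pc_aggpage_search_result.none-task-blog-2~all~sobaiduweb~default-0-92436337&spm=3001.4430.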

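I. Setting up sentinel-dashboard

1. Building a custom sentinel-dashboard image

Naturally, I no longer like the term "docker image", so I'll just say image. The blog referenced above used version 1.6.1, I think? After getting it running, my OCD kicked in: the latest version is 1.8.1, so I modified the image based on foxiswho's configuration files.

vim Dockerfile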

```dockerfile
FROM openjdk:11.0.3-jdk-stretch
MAINTAINER foxiswho@gmail.com
ARG version
ARG port
# sentinel version
ENV SENTINEL_VERSION ${version:-1.8.1}
# PORT
ENV PORT ${port:-8858}
ENV JAVA_OPT=""
#
ENV PROJECT_NAME sentinel-dashboard
ENV SERVER_HOST localhost
ENV SERVER_PORT 8858
ENV USERNAME sentinel
ENV PASSWORD sentinel
# sentinel home
ENV SENTINEL_HOME /opt/
ENV SENTINEL_LOGS /opt/logs
# time zone
RUN rm -rf /etc/localtime \
    && ln -s /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
# create logs
RUN mkdir -p ${SENTINEL_LOGS}
# get the version
#RUN cd / \
#    && wget https://github.com/alibaba/Sentinel/releases/download/${SENTINEL_VERSION}/sentinel-dashboard-${SENTINEL_VERSION}.jar -O sentinel-dashboard.jar \
#    && mv sentinel-dashboard.jar ${SENTINEL_HOME} \
#    && chmod -R +x ${SENTINEL_HOME}/*jar
# test file
COPY sentinel-dashboard.jar ${SENTINEL_HOME}
# add scripts
COPY scripts/* /usr/local/bin/
RUN chmod +x /usr/local/bin/docker-entrypoint.sh \
    && ln -s /usr/local/bin/docker-entrypoint.sh /opt/docker-entrypoint.sh
#
RUN chmod -R +x ${SENTINEL_HOME}/*jar
VOLUME ${SENTINEL_LOGS}
WORKDIR ${SENTINEL_HOME}
EXPOSE ${PORT} 8719
CMD java ${JAVA_OPT} -jar sentinel-dashboard.jar
ENTRYPOINT ["docker-entrypoint.sh"]
```

Note: foxiswho's original Dockerfile exposes port 8200. Since Sentinel is usually exposed on port 8858, I changed the Dockerfile accordingly. I then downloaded the 1.8.1 jar from https://github.com/alibaba/Sentinel/releases, renamed it to sentinel-dashboard.jar, and put it in the current directory.

The dc directory can be ignored; the original project is copied from https://github.com/foxiswho/docker-sentinel

cat scripts/docker-entrypoint.sh

```bash
#!/bin/bash
# Licensed to the Apache Software Foundation (ASF) under one or more
# contributor license agreements.  See the NOTICE file distributed with
# this work for additional information regarding copyright ownership.
# The ASF licenses this file to You under the Apache License, Version 2.0
# (the "License"); you may not use this file except in compliance with
# the License.  You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

#===========================================================================================
# Java Environment Setting
#===========================================================================================
error_exit ()
{
    echo "ERROR: $1 !!"
    exit 1
}

[ ! -e "$JAVA_HOME/bin/java" ] && JAVA_HOME=$HOME/jdk/java
[ ! -e "$JAVA_HOME/bin/java" ] && JAVA_HOME=/usr/java
[ ! -e "$JAVA_HOME/bin/java" ] && error_exit "Please set the JAVA_HOME variable in your environment, We need java(x64)!"

export JAVA_HOME
export JAVA="$JAVA_HOME/bin/java"
export BASE_DIR=$(dirname $0)/..
export CLASSPATH=.:${BASE_DIR}/conf:${CLASSPATH}

#===========================================================================================
# JVM Configuration
#===========================================================================================
# Get the max heap used by a jvm, which used all the ram available to the container.
if [ -z "$MAX_POSSIBLE_HEAP" ]
then
    MAX_POSSIBLE_RAM_STR=$(java -XX:+UnlockExperimentalVMOptions -XX:MaxRAMFraction=1 -XshowSettings:vm -version 2>&1 | awk '/Max\. Heap Size \(Estimated\): [0-9KMG]+/{ print $5}')
    MAX_POSSIBLE_RAM=$MAX_POSSIBLE_RAM_STR
    CAL_UNIT=${MAX_POSSIBLE_RAM_STR: -1}
    if [ "$CAL_UNIT" == "G" -o "$CAL_UNIT" == "g" ]; then
        MAX_POSSIBLE_RAM=$(echo ${MAX_POSSIBLE_RAM_STR:0:${#MAX_POSSIBLE_RAM_STR}-1} `expr 1 \* 1024 \* 1024 \* 1024` | awk '{printf "%d",$1*$2}')
    elif [ "$CAL_UNIT" == "M" -o "$CAL_UNIT" == "m" ]; then
        MAX_POSSIBLE_RAM=$(echo ${MAX_POSSIBLE_RAM_STR:0:${#MAX_POSSIBLE_RAM_STR}-1} `expr 1 \* 1024 \* 1024` | awk '{printf "%d",$1*$2}')
    elif [ "$CAL_UNIT" == "K" -o "$CAL_UNIT" == "k" ]; then
        MAX_POSSIBLE_RAM=$(echo ${MAX_POSSIBLE_RAM_STR:0:${#MAX_POSSIBLE_RAM_STR}-1} `expr 1 \* 1024` | awk '{printf "%d",$1*$2}')
    fi
    MAX_POSSIBLE_HEAP=$[MAX_POSSIBLE_RAM/4]
fi

# Dynamically calculate parameters, for reference.
Xms=$MAX_POSSIBLE_HEAP
Xmx=$MAX_POSSIBLE_HEAP
Xmn=$[MAX_POSSIBLE_HEAP/2]

# Set for `JAVA_OPT`.
JAVA_OPT="${JAVA_OPT} -server "
if [ x"${MAX_POSSIBLE_HEAP_AUTO}" = x"auto" ];then
    JAVA_OPT="${JAVA_OPT} -Xms${Xms} -Xmx${Xmx} -Xmn${Xmn}"
fi

#-XX:+UseCMSCompactAtFullCollection
#JAVA_OPT="${JAVA_OPT} -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70 -XX:+CMSParallelRemarkEnabled -XX:SoftRefLRUPolicyMSPerMB=0 -XX:+CMSClassUnloadingEnabled -XX:SurvivorRatio=8 "
#JAVA_OPT="${JAVA_OPT} -verbose:gc -Xloggc:/dev/shm/rmq_srv_gc.log -XX:+PrintGCDetails"
#JAVA_OPT="${JAVA_OPT} -XX:-OmitStackTraceInFastThrow"
#JAVA_OPT="${JAVA_OPT} -XX:-UseLargePages"
#JAVA_OPT="${JAVA_OPT} -Djava.ext.dirs=${JAVA_HOME}/jre/lib/ext:${BASE_DIR}/lib"
#JAVA_OPT="${JAVA_OPT} -Xdebug -Xrunjdwp:transport=dt_socket,address=9555,server=y,suspend=n"
JAVA_OPT="${JAVA_OPT} -Dserver.port=${PORT} "
JAVA_OPT="${JAVA_OPT} -Dcsp.sentinel.log.dir=${SENTINEL_LOGS} "
JAVA_OPT="${JAVA_OPT} -Djava.security.egd=file:/dev/./urandom"
JAVA_OPT="${JAVA_OPT} -Dproject.name=${PROJECT_NAME} "
JAVA_OPT="${JAVA_OPT} -Dcsp.sentinel.app.type=1 "
JAVA_OPT="${JAVA_OPT} -Dsentinel.dashboard.auth.username=${USERNAME} "
JAVA_OPT="${JAVA_OPT} -Dsentinel.dashboard.auth.password=${PASSWORD} "
JAVA_OPT="${JAVA_OPT} -Dcsp.sentinel.dashboard.server=${SERVER_HOST:-localhost}:${SERVER_PORT:-8858} "
JAVA_OPT="${JAVA_OPT} ${JAVA_OPT_EXT}"
JAVA_OPT="${JAVA_OPT} -jar sentinel-dashboard.jar "
JAVA_OPT="${JAVA_OPT} -cp ${CLASSPATH}"

echo "JAVA_OPT============"
echo "JAVA_OPT============"
echo "JAVA_OPT============"
echo $JAVA_OPT
$JAVA ${JAVA_OPT} $@
```

Again, I copied foxiswho's startup script. Note, though: the original also hardcodes port 8200 here... remember to change it.
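The heap sizing in the script above is easy to misread, so here is the same arithmetic pulled out into functions that can be tried without a JVM. This is a sketch, and the function names are mine: the script only applies these flags when MAX_POSSIBLE_HEAP_AUTO=auto, and this version swaps the script's `printf "%d"` for `int()` plus `"%.0f"` so results above 2 GiB print correctly even on older awk builds whose `%d` is limited to 32 bits.

```shell
#!/usr/bin/env bash
# size_to_bytes "3.84G" -> bytes, dropping any fraction like the script does.
# Handles the same G/M/K suffixes (either case) as docker-entrypoint.sh.
size_to_bytes() {
  local str="$1" mult
  case "${str: -1}" in
    G|g) mult=$((1024 * 1024 * 1024)) ;;
    M|m) mult=$((1024 * 1024)) ;;
    K|k) mult=1024 ;;
    *)   echo "$str"; return 0 ;;  # no unit suffix: use the value as-is
  esac
  echo "${str:0:${#str}-1} $mult" | awk '{ printf "%.0f", int($1 * $2) }'
}

# Like the script: Xms = Xmx = a quarter of the estimated max heap, Xmn = half of that.
heap_flags() {
  local ram heap
  ram=$(size_to_bytes "$1")
  heap=$(( ram / 4 ))
  echo "-Xms${heap} -Xmx${heap} -Xmn$(( heap / 2 ))"
}

# Usage:
#   heap_flags 2G   -> -Xms536870912 -Xmx536870912 -Xmn268435456
```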

Now build the image:

```shell
docker build -t ccr.ccs.tencentyun.com/xxxx/sentinel:1.8.1 .
docker push ccr.ccs.tencentyun.com/xxxx/sentinel:1.8.1
```

By the way, could I use crictl for this? Neither crictl nor ctr supports building images... Maybe later I could consider building images with BuildKit?

2. Deploying Sentinel in the Kubernetes cluster

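The nacos namespace was created in Setting up Nacos on Kubernetes 1.20.5, and Sentinel is deployed into that same nacos namespace, without much complex configuration. These are all simple demos; for now the goal is just to get the whole flow working.

1. Deploy the ConfigMap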

cat config.yaml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: sentinel-cm
data:
  sentinel.server.host: "sentinel"
  sentinel.server.port: "8858"
  sentinel.dashboard.auth.username: "sentinel111111"
  sentinel.dashboard.auth.password: "W3$ti$aifffdfGEqjf.xOkZ"
```

Note: for sentinel.server.host I simply used the service name, and so far it has started without any problems. Normally, should it be an FQDN like sentinel.nacos.svc.cluster.local? (My cluster domain isn't cluster.local, of course.)
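On the FQDN question: a pod's /etc/resolv.conf normally lists search domains starting with its own namespace (`<namespace>.svc.<cluster-domain>`), which is why the short name sentinel resolves from inside the nacos namespace; the full name mainly matters when calling across namespaces. A tiny sketch of how that name is composed; `svc_fqdn` is a hypothetical helper, and cluster.local is only the default cluster domain:

```shell
#!/usr/bin/env bash
# svc_fqdn: build the in-cluster DNS name of a Service (hypothetical helper).
#   <service>.<namespace>.svc.<cluster-domain>
svc_fqdn() {
  local svc="$1" ns="$2" domain="${3:-cluster.local}"
  echo "${svc}.${ns}.svc.${domain}"
}

# Usage:
#   svc_fqdn sentinel nacos                 -> sentinel.nacos.svc.cluster.local
#   svc_fqdn sentinel nacos example.domain  -> sentinel.nacos.svc.example.domain
```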

```shell
kubectl apply -f config.yaml -n nacos
```

2. Deploy the Sentinel StatefulSet

cat pod.yaml

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: sentinel
  labels:
    app: sentinel
spec:
  serviceName: sentinel
  replicas: 1
  selector:
    matchLabels:
      app: sentinel
  template:
    metadata:
      labels:
        app: sentinel
    spec:
      containers:
        - name: sentinel
          image: ccr.ccs.tencentyun.com/XXXX/sentinel:1.8.1
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              cpu: 450m
              memory: 1024Mi
            requests:
              cpu: 400m
              memory: 1024Mi
          env:
            - name: TZ
              value: Asia/Shanghai
            - name: JAVA_OPT_EXT
              value: "-Dserver.servlet.session.timeout=7200 "
            - name: SERVER_HOST
              valueFrom:
                configMapKeyRef:
                  name: sentinel-cm
                  key: sentinel.server.host
            - name: SERVER_PORT
              valueFrom:
                configMapKeyRef:
                  name: sentinel-cm
                  key: sentinel.server.port
            - name: USERNAME
              valueFrom:
                configMapKeyRef:
                  name: sentinel-cm
                  key: sentinel.dashboard.auth.username
            - name: PASSWORD
              valueFrom:
                configMapKeyRef:
                  name: sentinel-cm
                  key: sentinel.dashboard.auth.password
          ports:
            - containerPort: 8858
            - containerPort: 8719
          volumeMounts:
            - name: vol-log
              mountPath: /opt/logs
      volumes:
        - name: vol-log
          hostPath:
            path: /www/k8s/foxdev/sentinel/logs
            type: Directory
```

```shell
kubectl apply -f pod.yaml -n nacos
```

Note: I was lazy and didn't bother with proper volumes, since this is only a test; I just created the /www/k8s/foxdev/sentinel/logs directory on all three worker nodes. This is basically foxiswho's configuration copied directly.

3. Deploy the Service

cat svc.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: sentinel
  labels:
    app: sentinel
spec:
  type: ClusterIP
  ports:
    - port: 8858
      targetPort: 8858
      name: web
    - port: 8719
      targetPort: 8719
      name: api
  selector:
    app: sentinel
```

```shell
kubectl apply -f svc.yaml -n nacos
```

4. Verify that the service is working

```shell
kubectl get pod,svc -n nacos
kubectl logs -f sentinel-0 -n nacos
```

5. Expose the Sentinel dashboard externally through an Ingress

cat ingress.yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: sentinel-http
  namespace: nacos
  annotations:
    kubernetes.io/ingress.class: traefik
    traefik.ingress.kubernetes.io/router.entrypoints: web
spec:
  rules:
    - host: sentinel.saynaihe.com
      http:
        paths:
          - pathType: Prefix
            path: /
            backend:
              service:
                name: sentinel
                port:
                  number: 8858
```

Log in with the username and password set in the ConfigMap.

The console:

Real-time monitoring, call chains, flow control rules and degrade rules: I like the look of all of these... I'll study how to use them later.

-

Kubernetes 1.20.5: upgrading GitLab 13.6 to 13.10

I installed GitLab 13.6... Apparently it has a bug recently, and lots of people recommend upgrading to 13.10. So I cut a corner: I only changed the image tag in gitlab.yaml and then ran:

```shell
kubectl apply -f gitlab.yaml
kubectl describe pods gitlab-b9d95f784-dkgbj -n kube-ops
```

Hmm, the PVC can't be mounted. OK... the crudest fix: delete and recreate.

```shell
kubectl delete -f gitlab.yaml -n kube-ops
kubectl apply -f gitlab.yaml -n kube-ops
kubectl describe pods gitlab-b9d95f784-7h8dt -n kube-ops
```

Log in to the web UI to verify...

Note: for a proper version upgrade, follow the documentation at https://github.com/sameersbn/docker-gitlab.

My GitLab was basically empty, and this was a minor version upgrade, so I skipped that. But especially where data is involved, don't look for shortcuts... safety first, haha. And a warning to myself: don't cut corners.

-

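Setting up Nacos on Kubernetes 1.20.5

Preface:

The backend team is preparing to build a PvP battle service and chose Alibaba's Nacos as the configuration center; see https://nacos.io/zh-cn/docs. Since all the business services are planned to run on Kubernetes, I simply followed https://nacos.io/zh-cn/docs/use-nacos-with-kubernetes.html to put together a demo for them to play with first.

About Nacos:

Reference: https://nacos.io/zh-cn/docs/what-is-nacos.html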

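- **Service discovery and health checking:** Supports DNS-based and RPC-based service discovery. After a service provider registers a service using the native SDK, OpenAPI, or a standalone agent, service consumers can look up and discover services via DNS or HTTP & API. Nacos provides real-time health checks of services and prevents requests from being sent to unhealthy hosts or service instances.

- **Dynamic configuration service:** Nacos provides unified configuration management, helping us manage the application and service configuration of every environment in a centralized, externalized, and dynamic way.

- **Dynamic DNS service:** Nacos supports dynamic DNS with weighted routing, making it easy to implement mid-tier load balancing, more flexible routing policies, traffic control, and simple DNS resolution inside the data center's internal network.

- **Service and metadata management:** Nacos supports managing all services and metadata in the data center from the perspective of building a microservice platform, including service descriptions, lifecycle, static dependency analysis, health status, traffic management, routing and security policies, service SLAs, and, most importantly, metrics statistics.

- ...and there is a longer list of features.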

I. Nacos on Kubernetes

The basic installation process follows: https://github.com/nacos-group/nacos-k8s/blob/master/README-CN.md

1. Create the namespace

**Of course, the first step is to create a namespace for the Nacos service:**

```shell
kubectl create ns nacos
```

2. git clone the repository

```shell
git clone https://github.com/nacos-group/nacos-k8s.git
```

The clone usually fails because of network problems, so I downloaded the archive locally and uploaded it to the server.

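3. Deploy and initialize the MySQL server

For production we would certainly use a cloud vendor's managed database, such as Tencent Cloud's RDS service. Since this is only a demo for the developers to play with, I put MySQL into Kubernetes as well. **For storage, my StorageClass is the default Tencent Cloud cbs-csi.**

**cd /nacos-k8s/mysql** (the directory I actually uploaded is /root/nacos/nacos-k8s-master/deploy/mysql)

1. Deploy the MySQL service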

cat pvc.yaml

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nacos-mysql-pvc
  namespace: nacos
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: cbs-csi
```

For the MySQL manifest, I copied mysql-ceph.yaml and modified it slightly:

cat mysql.yaml

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql
  labels:
    name: mysql
spec:
  replicas: 1
  selector:
    name: mysql
  template:
    metadata:
      labels:
        name: mysql
    spec:
      containers:
        - name: mysql
          image: nacos/nacos-mysql:5.7
          ports:
            - containerPort: 3306
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "root"
            - name: MYSQL_DATABASE
              value: "nacos_devtest"
            - name: MYSQL_USER
              value: "nacos"
            - name: MYSQL_PASSWORD
              value: "nacos"
          volumeMounts:
            - name: mysql-persistent-storage
              mountPath: /var/lib/mysql
              subPath: mysql
              readOnly: false
      volumes:
        - name: mysql-persistent-storage
          persistentVolumeClaim:
            claimName: nacos-mysql-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: mysql
  labels:
    name: mysql
spec:
  ports:
    - port: 3306
      targetPort: 3306
  selector:
    name: mysql
```

```shell
kubectl apply -f pvc.yaml
kubectl apply -f mysql.yaml -n nacos
kubectl get pods -n nacos
```

Wait for the MySQL pod to reach Running:

```
$ kubectl get pods -n nacos
NAME          READY   STATUS    RESTARTS   AGE
mysql-hhs5q   1/1     Running   0          3h51m
```

2. Enter the MySQL container and run the initialization script

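```shell
kubectl exec -it mysql-hhs5q -n nacos -- bash
mysql -uroot -p          # enter the root password at the prompt
# Inside the mysql console:
#   create database if not exists nacos_devtest;
#   use nacos_devtest;
#   To save effort, I pasted in the contents of this SQL script directly:
#   https://github.com/alibaba/nacos/blob/develop/distribution/conf/nacos-mysql.sql
#   Then leave the mysql console:
#   quit;
exit                     # leave the container
```

4. Deploy Nacos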

From the mysql directory: cd ../nacos

cat nacos.yaml

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: nacos-headless
  labels:
    app: nacos
  annotations:
    service.alpha.kubernetes.io/tolerate-unready-endpoints: "true"
spec:
  ports:
    - port: 8848
      name: server
      targetPort: 8848
    - port: 7848
      name: rpc
      targetPort: 7848
  clusterIP: None
  selector:
    app: nacos
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: nacos-cm
data:
  mysql.db.name: "nacos_devtest"
  mysql.port: "3306"
  mysql.user: "nacos"
  mysql.password: "nacos"
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nacos
spec:
  serviceName: nacos-headless
  replicas: 3
  template:
    metadata:
      labels:
        app: nacos
      annotations:
        pod.alpha.kubernetes.io/initialized: "true"
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: "app"
                    operator: In
                    values:
                      - nacos
              topologyKey: "kubernetes.io/hostname"
      initContainers:
        - name: peer-finder-plugin-install
          image: nacos/nacos-peer-finder-plugin:1.0
          imagePullPolicy: Always
          volumeMounts:
            - mountPath: /home/nacos/plugins/peer-finder
              name: plugindir
      containers:
        - name: nacos
          imagePullPolicy: Always
          image: nacos/nacos-server:latest
          resources:
            requests:
              memory: "2Gi"
              cpu: "500m"
          ports:
            - containerPort: 8848
              name: client-port
            - containerPort: 7848
              name: rpc
          env:
            - name: NACOS_REPLICAS
              value: "2"
            - name: SERVICE_NAME
              value: "nacos-headless"
            - name: DOMAIN_NAME
              value: "layabox.daemon"
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: metadata.namespace
            - name: MYSQL_SERVICE_DB_NAME
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.db.name
            - name: MYSQL_SERVICE_PORT
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.port
            - name: MYSQL_SERVICE_USER
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.user
            - name: MYSQL_SERVICE_PASSWORD
              valueFrom:
                configMapKeyRef:
                  name: nacos-cm
                  key: mysql.password
            - name: NACOS_SERVER_PORT
              value: "8848"
            - name: NACOS_APPLICATION_PORT
              value: "8848"
```

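- 2022-08-09-Operator 3: Designing an operator, part 2: using Owns

- 2022-07-11-Operator 2: A simple operator, starting from a Pod

- 2021-07-20-Upgrading Kubernetes 1.19.12 to 1.20.9 (with emphasis on selfLink)

- 2021-07-19-Upgrading Kubernetes 1.18.20 to 1.19.12

- 2021-07-17-Upgrading Kubernetes 1.17.17 to 1.18.20

- 2021-07-16-Setting up Elasticsearch on Kubernetes with TKE 1.20.6

- 2021-07-15-Proxying ws and wss applications with Traefik on Kubernetes

- 2021-07-09-A first look at TKE 1.20.6

- 2021-07-08-Cilium configuration issues when installing CentOS 8 + kubeadm 1.20.5 + Cilium + Hubble (special emphasis)

- 2021-07-02-First experience with Tencent Cloud TKE 1.18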