描述背景:

已经搭建完的k8s集群只有两个worker节点,现在增加work节点到集群。原有集群机器:

| ip | 自定义域名 | 主机名 |

| :----: | :----: | :----: |

| | master.k8s.io | k8s-vip |

|192.168.0.195 | master01.k8s.io | k8s-master-01|

|192.168.0.197 | master02.k8s.io | k8s-master-02|

|192.168.0.198 | master03.k8s.io | k8s-master-03|

|192.168.0.199 | node01.k8s.io | k8s-node-01|

|192.168.0.202 | node02.k8s.io | k8s-node-02|

|192.168.0.108 | node03.k8s.io | k8s-node-03|

|192.168.0.111 | node04.k8s.io | k8s-node-04|

|192.168.0.115 | node05.k8s.io | k8s-node-05|

初始集群参照 https://duiniwukenaihe.github.io/2019/09/18/k8s-expanded/ 由于上周kubernetes1.15.3爆出漏洞,决定将集群升级为1.16.0 。

#升级master节点

##k8s-master-01节点操作

yum list --showduplicates kubeadm --disableexcludes=kubernetes #查看yum源中可支持版本

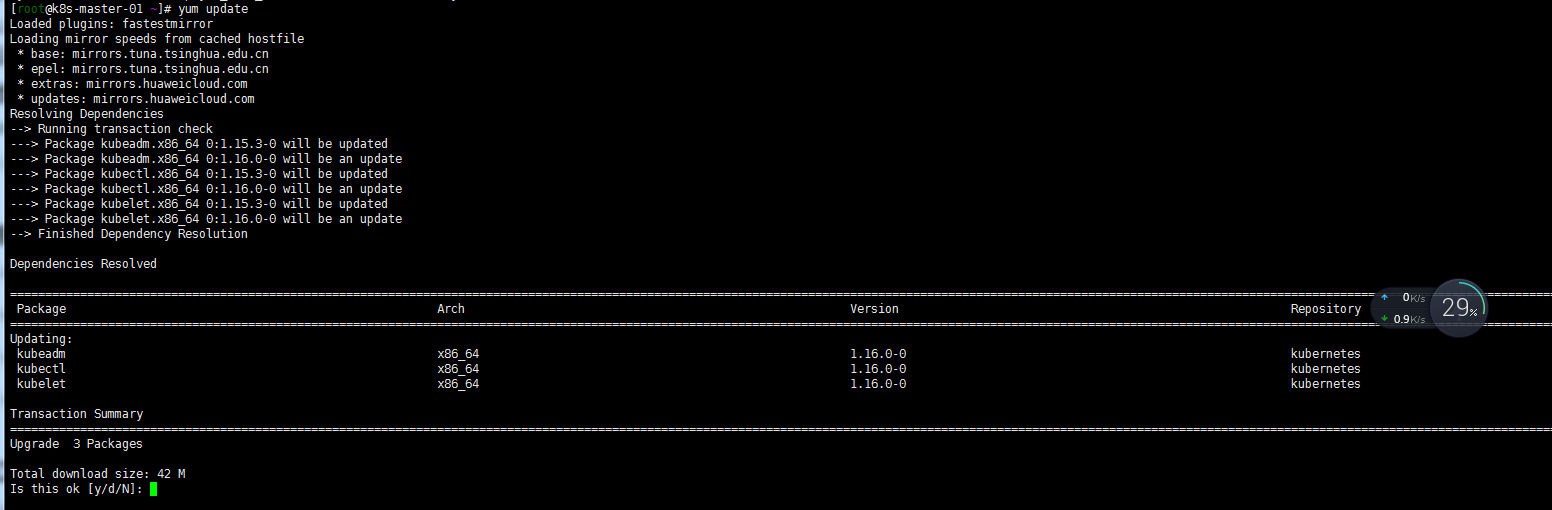

yum install kubeadm-1.16.0 kubectl-1.16.0 --disableexcludes=kubernetes。我这里懒人方式直接yum update了

kubeadm version

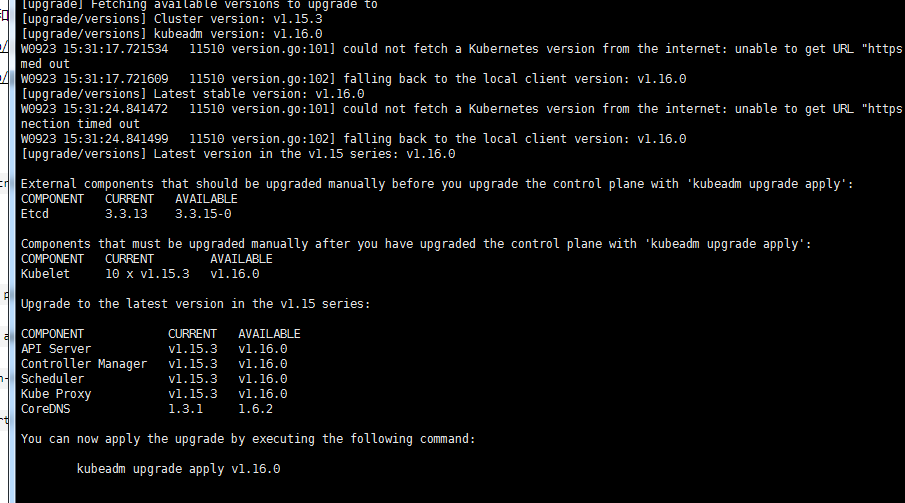

kubeadm upgrade plan --certificate-renewal=false # 如果不加-certificate-renewal=false将重新生成证书

kubeadm upgrade apply v1.16.0

systemctl daemon-reload

systemctl restart kubelet

##发现 master节点一直not ready

参照: https://github.com/coreos/flannel/pull/1181/commits/2be363419f0cf6a497235a457f6511df396685d4

cat <<EOF > /etc/cni/net.d/10-flannel.conflist

{

"name": "cbr0",

"cniVersion": "0.2.0",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

EOF

最好还是修改下kube-flannel.yml

kubectl apply -f kube-flannel.yml

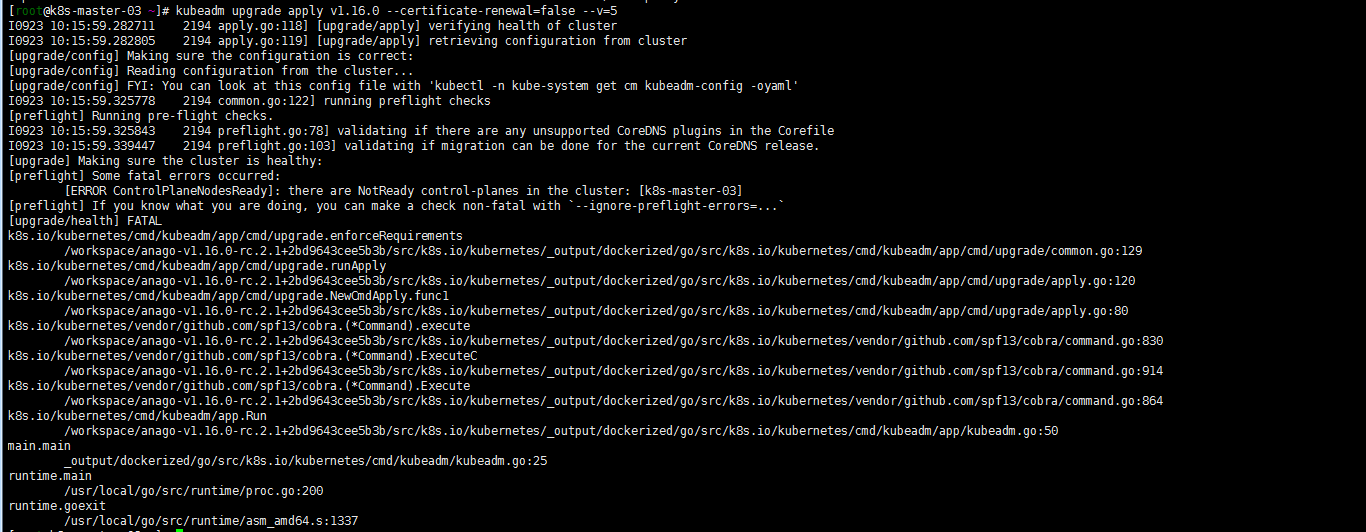

##依次升级其他master02 master03节点

yum install kubeadm-1.16.0 kubectl-1.16.0 --disableexcludes=kubernetes

kubeadm upgrade apply v1.16.0

systemctl daemon-reload

systemctl restart kubelet

##worker nodes 节点升级

#在master01节点依次将worker节点设置为不可调用

kubectl drain $node --ignore-daemonsets

yum install kubeadm-1.16.0 kubectl-1.16.0 --disableexcludes=kubernetes

systemctl daemon-reload

systemctl restart kubelet

systemctl status kubelet

kubectl uncordon $node

work节点以此升级

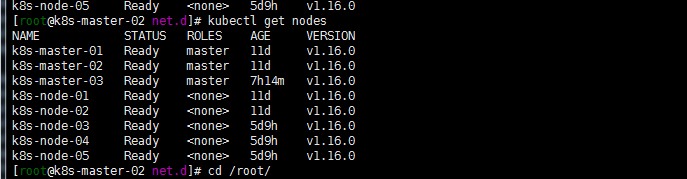

##查看集群##

kubectl get nodes

注:碰到的问题 早前yaml文件apiVersion用的是extensions/v1beta1 ,1,16要修改为apps/v1beta1。具体看https://kubernetes.io/zh/docs/concepts/workloads/controllers/deployment/